Deep learning has impacted many fields, including healthcare. In radiology in particular, deep learning models run on data streaming pipelines to automate the report generation process.

This level of smart assistance has helped radiologists perform their day-to-day tasks efficiently, but it has also introduced new challenges for the technology. During the ML model training phase, the challenge is tracking a wide variety of experiments; during productionization, it is managing the lifecycle information of the ML models. In radiology, building different models for sagittal, coronal, and axial view images to predict pathology metrics from MRI data stored in DICOM format is difficult to track, record, and maintain.

Challenges in training ML models

One example in radiology is the detection of Schmorl’s nodes in MRI images. To perform this task on sagittal images, one first needs a model that accurately detects vertebrae, and a second model that detects Schmorl’s nodes within each vertebra. The accuracy of the Schmorl’s node detection task therefore depends on the accuracy of the vertebra detection model. MLflow makes it possible to build such multi-step workflows, with separate vertebra detection and Schmorl’s node detection projects.

When the vertebra detection experiment has finished and produced its output artifacts, these artifacts can be passed to the Schmorl’s node detection step using path or URI parameters. Similarly, the deployment lifecycle of the trained vertebra detection and Schmorl’s node detection models can be managed with MLflow.
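The two-step workflow described above could be sketched roughly as follows. This is only an illustration: the project paths, the `main` entry point, and the parameter names `image_dir` and `vertebra_artifacts` are hypothetical and would have to match whatever each project's MLproject file actually declares.

```python
def run_schmorl_pipeline(vertebra_project, schmorl_project, image_dir):
    """Run vertebra detection, then Schmorl's node detection on its outputs."""
    import mlflow  # imported lazily; requires `pip3 install mlflow`

    # Step 1: run the vertebra detection project (blocks until it finishes).
    vertebra_run = mlflow.run(
        vertebra_project,
        entry_point="main",  # hypothetical entry point name
        parameters={"image_dir": image_dir},  # hypothetical parameter
    )

    # Look up where that run stored its output artifacts.
    artifact_uri = mlflow.get_run(vertebra_run.run_id).info.artifact_uri

    # Step 2: hand the artifact URI to the Schmorl's node project as a parameter.
    schmorl_run = mlflow.run(
        schmorl_project,
        entry_point="main",
        parameters={
            "image_dir": image_dir,
            "vertebra_artifacts": artifact_uri,  # hypothetical parameter
        },
    )
    return schmorl_run.run_id
```

A call such as `run_schmorl_pipeline("./vertebra_detection", "./schmorl_detection", "/data/sagittal")` would then return the run ID of the second step, assuming both directories are valid MLflow projects.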

Being productive with machine learning in such a scenario can therefore be challenging for several reasons:

- Difficulty in tracking a large number of experiments

- Issues with code reproducibility

- Unstructured way of packaging code

- No centralized storage to manage the lifecycle information of ML models

MLflow and its components

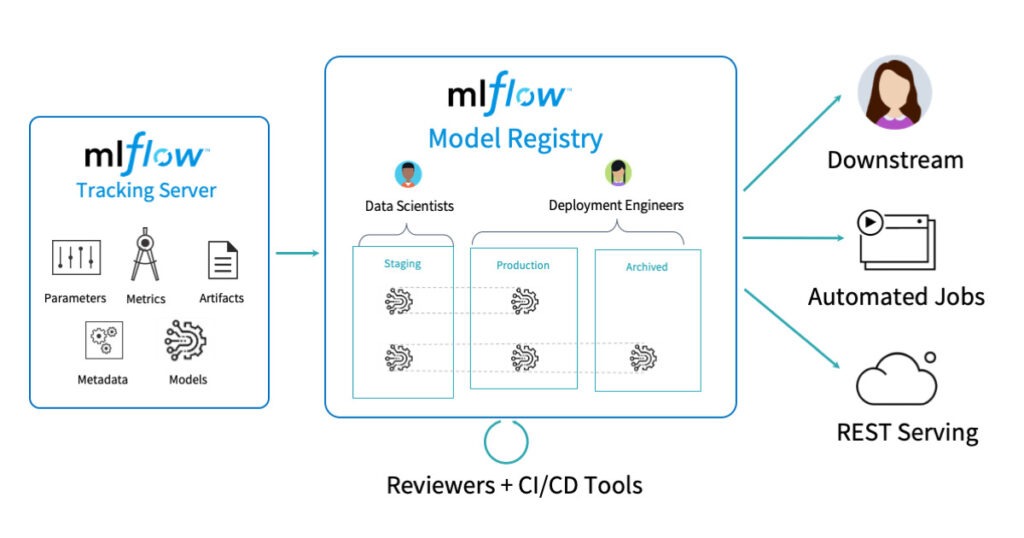

MLflow is an open source platform for managing the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry.

MLflow contains four components:

- MLflow Tracking

- MLflow Projects

- MLflow Models

- MLflow Registry

The MLflow Tracking component is an API and UI for logging parameters, code versions, metrics, and output files, and for visualizing ML experiments afterwards. Python, REST, R, and Java APIs are currently available. This component is very helpful when many experiments need to be run, recorded, and later compared against chosen performance metrics to select the best ML model.
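As a rough illustration of the Tracking API from Python, the sketch below logs a set of hyperparameters once and a dictionary of metrics per epoch. The function name, the parameter names, and the metric names are made up for the example; only the `mlflow` calls themselves come from the library.

```python
def log_training_run(params, metrics_per_epoch, run_name):
    """Log hyperparameters and per-epoch metrics for one training run."""
    import mlflow  # imported lazily; requires `pip3 install mlflow`

    with mlflow.start_run(run_name=run_name) as run:
        # Hyperparameters are logged once per run.
        mlflow.log_params(params)
        # Metrics are logged with a step so the UI can plot them over epochs.
        for epoch, metrics in enumerate(metrics_per_epoch):
            mlflow.log_metrics(metrics, step=epoch)
        return run.info.run_id
```

For example, `log_training_run({"lr": 0.001}, [{"val_loss": 1.0}, {"val_loss": 0.5}], "vertebra-sagittal")` would record one run with two metric steps. With no tracking server configured, MLflow writes to a local store by default.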

The MLflow Projects component is a format for packaging deep learning code in a reusable and reproducible way, based primarily on conventions.

The MLflow Models component is a standard format for packaging machine learning models so that they can be used by downstream tools, for example real-time serving through a REST API or batch inference on Apache Spark.

The MLflow Registry component is a centralized model store, set of APIs, and UI for collaboratively managing the full lifecycle of an MLflow Model. This component plays a key role in keeping track of the various trained models along with their lifecycle information.
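Registering a trained model might look like the sketch below. It assumes the model was logged earlier in the run under the artifact path `model` (a hypothetical default here), and that the tracking backend in use supports the registry; depending on the MLflow version, this may require a database-backed store.

```python
def register_trained_model(run_id, registered_name, artifact_path="model"):
    """Register a model logged in a finished run and return its version."""
    import mlflow  # imported lazily; requires `pip3 install mlflow`

    # "runs:/<run_id>/<artifact_path>" points at a model logged inside a run.
    model_uri = f"runs:/{run_id}/{artifact_path}"
    model_version = mlflow.register_model(model_uri=model_uri, name=registered_name)
    return model_version.version
```

Calling this once for the vertebra detection model and once for the Schmorl’s node detection model, each under its own registered name, would give the two models separate version histories in the registry.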

MLflow can also launch multiple experiments in parallel; a short script can then cancel an experiment, launch new experiments, or select the best-performing experiment based on a target metric.
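Selecting the best-performing run by a target metric can be sketched with `mlflow.search_runs`. The metric name `val_accuracy` is an assumption for illustration; it must match whatever metric the training code actually logged.

```python
def best_run_id(experiment_id, metric="val_accuracy", higher_is_better=True):
    """Return the ID of the best run in an experiment, or None if it has no runs."""
    import mlflow  # imported lazily; requires `pip3 install mlflow`

    direction = "DESC" if higher_is_better else "ASC"
    runs = mlflow.search_runs(
        experiment_ids=[experiment_id],
        order_by=[f"metrics.{metric} {direction}"],  # sort by the target metric
        max_results=1,  # only the top run is needed
        output_format="list",  # return Run objects instead of a DataFrame
    )
    return runs[0].info.run_id if runs else None
```

For a loss-style metric, where lower is better, the same function can be called with `higher_is_better=False`.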

Airflow vs. Kubeflow vs. MLflow

Airflow is a generic task orchestration platform. It is a set of components and plugins for managing and scheduling tasks.

Kubeflow makes use of Kubernetes. Kubeflow lets you build a full DAG where each step is a Kubernetes pod.

MLflow is a Python library that you can import into your existing machine learning code to track and store the metrics of each ML experiment. MLflow also has built-in functionality to deploy trained models and manage their deployment lifecycle.

Steps to install MLflow on Linux:

- Install MLflow on Ubuntu: pip3 install mlflow

- Start the MLflow server with the Tracking UI accessible only from the local system: mlflow ui --serve-artifacts

- Start the MLflow server with the Tracking UI also accessible from a remote system (replace x.x.x.x with the IP address): mlflow ui --host x.x.x.x --serve-artifacts
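Once the server is running, training code on another machine can be pointed at it. The sketch below assumes the server listens on MLflow's default port 5000 and uses a hypothetical experiment name; both should be adjusted to the actual setup.

```python
def use_tracking_server(host, port=5000, experiment="vertebra-detection"):
    """Point this process at a remote MLflow tracking server."""
    import mlflow  # imported lazily; requires `pip3 install mlflow`

    uri = f"http://{host}:{port}"
    mlflow.set_tracking_uri(uri)  # all later logging calls go to this server
    mlflow.set_experiment(experiment)  # hypothetical experiment name
    return uri
```

After calling `use_tracking_server("x.x.x.x")` with the server's IP address, runs logged from that process should appear in the Tracking UI served at the same address.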